BigQuery table labels

How to work with BigQuery table labels as easy using SQL

BigQuery is amazing service but let’s not forget about some important limitations. Especially if we need to store some metadata about our data warehouse jobs, their results etc.

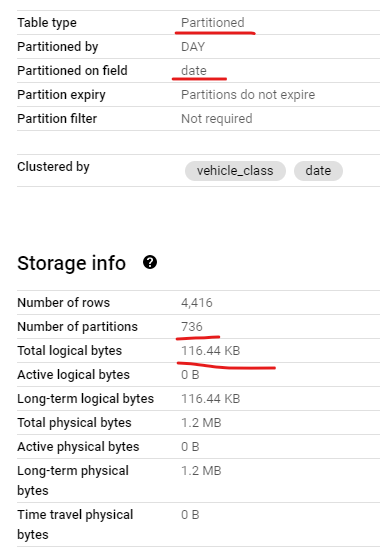

Imagine we have small data warehouse with 64 (yes, binary-like number 😉) batch jobs, need to be run on regular basis, e.g. hourly. If we don’t want to read source tables fully for each run then we need to store somewhere at least beginning time of last batch job execution and use that value to filter out already processed records and avoid unnecessary data reads. Where to store such information? Sounds logical, to have single common table for such purpose, innit? It’ll be containing meta-information for all our batch jobs. So we need to upsert 64 values every hour. Thus our table will be changed 1536 times per day. Not so frequent but let’s look in BigQuery documentation:

As you can see, even for our small data warehouse we need to modify table with meta-information 1536 times per day but BigQuery allows only 1500 modifications! Thus approach with single meta-table doesn’t fit our requirements. And what if we have a little bit bigger solution? For example 256 jobs need to be run each 15 minutes. Certainly we can create one meta-table per each job. However, what if we have 500 jobs? 1000 jobs? How to manage all those tables, how to ensure data and metadata consistency? Such approach become much more complex than we expect.

The idea: BigQuery table labels can be life saver to store such meta-information.

If we use such approach then we store any table-related information in that particular table’s metadata (please bear in mind requirements for labels).

However, if you try to do smth. with labels then you realize soon that out-of-the-box SQL interface to work with table labels is poor. This is why I created several stored procedures to make label-related work much easier. Those procedures are segregated in three different categories:

Utility

- parse_table_name — properly split given table name into dataset path and table name. Do not work for temp tables’ names

- parse_and_validate_table_name — same as above + validatie whether given table exists on particular environment. Works even for temporary tables

Getters

- get_all_table_labels — returns array which cosists of all label names and values from given table. If there is no label associated with given table then empty array will be returned back

- get_many_table_labels — same as above but for particular label names. If given label doesn’t exist then procedure won’t return it

- get_table_label — returns value for particular label from given table. If label doesn’t exist then NULL will be returned back

Setters

- set_table_label — put given value into particular label. If label doesn’t exist then it will be created

- set_many_table_labels — same as above for several labels

- remove_table_label — remove particular label from given table

All setters work with only given set of lables and do not touch any other table label and/or option.

Code for procedures is written using “DNRY” principle, e.g. remove_table_label procedure calls set_table_label. And set_table_label procedure calls set_many_table_labels, where the main logic is placed.

Here is the simple script how to work with procedures. I used US-based poc_us dataset to create table and US-based procedures. Please change it by your own dataset and region (if required)

declare label_value string default null;

create or replace table poc_us.test_table_labels as select 1 col1;

call `xemuliam-bigquery`.US.set_many_table_labels('poc_us.test_table_labels', [

('label1', 'value1'),

('bla-bla-label', 'bla-bla-value')

]);

call `xemuliam-bigquery`.US.get_table_label(

'poc_us.test_table_labels',

'bla-bla-label',

label_value

);

select label_value;Where to find the code and procedures?

Code for those procedures is available on GitHub and procedures itselves can be found in xemuliam-bigquery project.

Whereas BigQuery disallows to mix EU and US data and procedures’ calls, I put the same procedures into EU- and US-based datasets.

To add above project in your BigQuery project list please proceed for following steps:

1. Click ADD button in your BigQuery explorer

2. Choose “Star project by name”

3. Enter xemuliam-bigquery and click STAR

4. Voila! It’s in your projects list

Enjoy! 😀

Additional information can be found in comments

My other BigQuery articles: